How to Summarize Text Using Machine Learning and Python?

The ability to extract key insights from large volumes of text and summarize them efficiently is critical in today’s digital world. Machine learning techniques, combined with the flexibility and power of the Python programming language, provide a practical answer to this problem. ML models can power intelligent systems that analyze long documents and condense them into brief summaries, which saves time and improves comprehension.

This article delves into the field of text summarization and provides an overview of the essential concepts and steps needed to build an accurate summarizer.

Text Summarization

When it comes to summarizing text using machine learning and Python, various Python libraries and machine learning models are available. Each combination requires different code and delivers different accuracy, depending on how the model has been trained. For this guide, we’ll use an advanced Python framework named Transformers and Facebook’s machine learning model named BART Large CNN.

So, with so many options available, why have we chosen Transformers and BART Large CNN? Let’s answer the “why” part before getting to the steps of summarizing text using machine learning and Python.

Text summarization is an advanced use case of Natural Language Processing (NLP), and Python frameworks like Transformers have made such use cases much easier to handle. That’s the primary reason for choosing the Transformers framework here. As for BART Large CNN, it is a BART model fine-tuned on the CNN/Daily Mail news summarization dataset, and it has given exceptional results for text summarization when used with Transformers. That’s the reason for choosing it in this guide.

Steps to Summarize Text Using BART Large CNN and Transformers Python Framework

The BART Large CNN machine learning model is easy to apply to short inputs and small datasets but becomes harder to handle as the inputs and datasets grow. So, let’s see the best way to use this machine learning model along with the selected Python framework (Transformers).

Note: The following steps assume that Python is already installed on your system. If you’re not sure whether it is, we recommend checking its installation status first by running the following command in the Terminal:

python --version

# Python 3.11.3

- First, install the Transformers framework, because it is required for advanced NLP tasks. You can do that by running the following command in the Terminal:

pip install transformers

- Upon running the above command, your system will download and install all the files the Transformers library needs to function properly. Once that is done, you will see a message confirming that the packages were successfully installed.

- The next step is to import what we need from the installed ‘Transformers’ library. Since text summarization is an NLP task, we’ll import the ‘pipeline’ helper, which provides a high-level interface for NLP tasks. The steps below call the model and tokenizer directly, so ‘pipeline’ is optional here. And since we want to use the BART Large CNN model, we’ll also import its model and tokenizer classes. So, type the following Python code:

from transformers import pipeline, BartForConditionalGeneration, BartTokenizer

Note: If you haven’t installed the necessary packages, the above line of code will raise an error when executed. So, run the following command before trying to import the classes from the installed library.

pip install transformers torch numpy pandas

- After importing the classes, it’s time to load the pre-trained BART model and tokenizer from the Hugging Face model hub. For that, we’ll use the from_pretrained() method. This is what the complete code for this step looks like:

# Load the pre-trained BART model and tokenizer

model = BartForConditionalGeneration.from_pretrained(‘facebook/bart-large-cnn’)

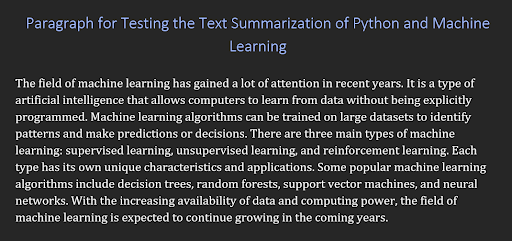

tokenizer = BartTokenizer.from_pretrained(‘facebook/bart-large-cnn’)- In the next step, we’ll input the main text we want to summarize. For this example, we’ve selected the following paragraph about machine learning:

So, the following line represents the code for inputting a complete paragraph in Python:

input_text = "The field of machine learning has gained a lot of attention in recent years. It is a type of artificial intelligence that allows computers to learn from data without being explicitly programmed. Machine learning algorithms can be trained on large datasets to identify patterns and make predictions or decisions. There are three main types of machine learning: supervised learning, unsupervised learning, and reinforcement learning. Each type has its own unique characteristics and applications. Some popular machine learning algorithms include decision trees, random forests, support vector machines, and neural networks. With the increasing availability of data and computing power, the field of machine learning is expected to continue growing in the coming years."

- It’s time to tokenize the input text. For that, we’ll use the pre-trained BART tokenizer. Here is what the code for this step looks like:

inputs = tokenizer(input_text, max_length=1024, truncation=True, return_tensors='pt')

Note: In the above step, we’ve specified the max_length and truncation parameters. Together they ensure that if the text is longer than the specified limit of 1024 tokens, it is automatically truncated. The return_tensors='pt' argument makes the tokenizer return PyTorch tensors, which is the format the model expects.
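To find out whether an input would actually be cut off, you can count its tokens before truncating. The following is a minimal sketch and not part of the original steps: the helper names count_tokens and will_be_truncated are hypothetical, and the code assumes the tokenizer is callable and returns a mapping with an 'input_ids' list, as Hugging Face tokenizers do when called without return_tensors.

```python
def count_tokens(tokenizer, text):
    """Return the number of token IDs the tokenizer produces for text."""
    # Assumption: called without truncation or return_tensors, the tokenizer
    # returns plain Python lists under the 'input_ids' key.
    return len(tokenizer(text)["input_ids"])


def will_be_truncated(tokenizer, text, max_length=1024):
    """Return True if text has more tokens than max_length."""
    return count_tokens(tokenizer, text) > max_length
```

With the tokenizer loaded earlier, calling will_be_truncated(tokenizer, input_text) tells you whether the 1024-token limit would drop part of the text.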

- All the prerequisites are done, so it’s time to generate the summary. For that, we’ll use the generate() method of the BART Large CNN model. The following is the code for this step:

# Generate the summary

summary_ids = model.generate(inputs['input_ids'], num_beams=4, max_length=100, early_stopping=True)

- The above line of code generates the summary as a sequence of token IDs, so we still need to decode it to convert the token IDs back into text. For that, we’ll use the tokenizer’s decode() method. The following line is the complete code for this step:

# Decode the summary

summary = tokenizer.decode(summary_ids[0], skip_special_tokens=True)

Note: As you can see, we’ve set the skip_special_tokens parameter to True. This removes the special tokens (such as the start-of-sequence and end-of-sequence markers) that the tokenizer and model add, so they don’t appear in the final text.

- Last but not least, we’ll print the generated summary to the screen because it’s essential to show the output. So, here is the code for this step:

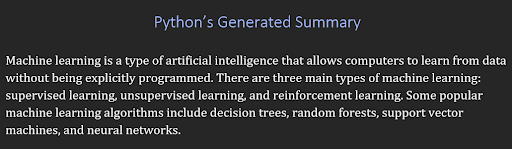

print(summary)Thus, once you’ve performed all the above steps, Python will generate the following output:

As you can see, the generated summary looks accurate and covers the key points of the input text. So that’s how you can easily create a text summarizer tool using Python and machine learning. The tool will automatically summarize documents while keeping their important points.
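The same kind of result can be reached with fewer lines through the ‘pipeline’ helper from the Transformers library. The sketch below is an alternative under stated assumptions, not the method used in the steps above: the function names build_summarizer and summarize are hypothetical, the length limits are illustrative values, and the code relies on the summarization pipeline returning a list of dictionaries with a 'summary_text' key.

```python
def build_summarizer(model_name="facebook/bart-large-cnn"):
    """Create a summarization pipeline (downloads the model on first use)."""
    # Imported here so that defining this helper does not itself require
    # the transformers package to be installed.
    from transformers import pipeline

    return pipeline("summarization", model=model_name)


def summarize(summarizer, text, max_length=100, min_length=20):
    """Return the summary string produced by the given pipeline for text."""
    result = summarizer(
        text,
        max_length=max_length,  # upper bound on summary length, in tokens
        min_length=min_length,  # lower bound on summary length, in tokens
        do_sample=False,        # no random sampling during generation
        truncation=True,        # cut inputs that exceed the model's limit
    )
    # Assumption: the pipeline returns one dict per input text.
    return result[0]["summary_text"]
```

Calling build_summarizer() once and then passing its result to summarize() together with input_text should yield a summary comparable to the one above, although the exact wording may differ because the length settings are not identical.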

Limitations of BART Large CNN Model

Judging by the output of the above example, the BART Large CNN model is quite accurate when it comes to text summarization, and Transformers is among the most capable Python libraries for the task. But there are some limitations associated with the BART Large CNN model. Let’s check them out so that you have a better idea of whether this machine learning model is an appropriate choice for you.

- For starters, the complete size of the BART Large CNN model is around 1.65GB (as of now). So, depending on your internet connection speed, this machine learning model may take a long time to download.

- This machine learning model can process at most 1024 tokens in one go, which is equivalent to around 800 words. That’s because punctuation marks and pieces of words also count as tokens, so a text has more tokens than words.

- Although it performs incredibly well, this machine learning model is quite slow, especially when it runs on a CPU without a dedicated GPU.

- This model requires at least 1.5GB of storage and of RAM in order to perform text summarization.
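One common way to work around the 1024-token limit is to split a long text into chunks that each fit within it, summarize every chunk, and join the partial summaries. The following is a rough sketch of that idea and not something the steps above do: the names chunk_sentences and summarize_long are hypothetical, count_tokens and summarize_fn are callables you supply yourself, and splitting on a period followed by a space is a deliberately naive sentence splitter.

```python
def chunk_sentences(text, count_tokens, max_tokens=1024):
    """Group sentences into chunks whose token counts stay within max_tokens.

    count_tokens is any callable that maps a string to its token count.
    A single sentence longer than max_tokens becomes its own chunk and
    would still need truncation.
    """
    # Naive sentence split; a real splitter would handle abbreviations etc.
    sentences = [s.strip() for s in text.split(". ") if s.strip()]
    chunks, current = [], ""
    for sentence in sentences:
        # Restore the period that the split removed.
        if sentence[-1] not in ".!?":
            sentence += "."
        candidate = f"{current} {sentence}".strip()
        if current and count_tokens(candidate) > max_tokens:
            # Adding this sentence would exceed the limit: close the chunk.
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def summarize_long(text, summarize_fn, count_tokens, max_tokens=1024):
    """Summarize each chunk with summarize_fn and join the results."""
    chunks = chunk_sentences(text, count_tokens, max_tokens)
    return " ".join(summarize_fn(chunk) for chunk in chunks)
```

Here count_tokens could wrap the BART tokenizer (for example, by returning the length of the 'input_ids' it produces), and summarize_fn could wrap the generate and decode steps shown earlier. Joining chunk summaries loses some context across chunk boundaries, so treat the result as an approximation.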

Conclusion

Summarizing text is a handy way of quickly extracting its key points. But this technique only proves helpful if you know the right way to do it. So, if you don’t want to summarize text manually, you can use machine learning models and Python libraries to perform accurate text summarization.

As this post shows, Python libraries and machine learning models can quickly generate accurate summaries while preserving the original context. So, you can use modern technology to generate text summaries and increase productivity at your work.