Become a Fullstack JavaScript Developer, Part 5: The DevOps

DevOps is quite specialized and is often handled by dedicated DevOps engineers on your team. You see them working in the terminal all the time (it looks cool, right?), but what they are doing can feel like a gray area to you.

- Part 1: The Motivation

- Part 2: The Basics

- Part 3: The Backend

- Part 4: The Frontend

- Part 5: The DevOps (this post)

- Part 6: The Monorepo

A few years ago, DevOps tasks were fairly simple and mostly consisted of configuring packages on a server. Nowadays, with the rise of container technology, the work is more complicated, and DevOps engineers write more code than ever!

The roadmap for a serious DevOps engineer who is capable of working at a big company is overwhelming. It requires a broad understanding of tools and technologies:

- Basics (Operating System, Scripting, Networking, Security)

- Source Control (Git, SVN, Mercurial, GitHub)

- Continuous Integration (Jenkins, CircleCI, TeamCity, Travis CI, Drone)

- Cloud Provider (AWS, Azure, Google Cloud, Heroku, DigitalOcean)

- Web Server (Apache, Nginx, IIS, Tomcat, etc.)

- Infrastructure as Code (Docker, Ansible, Kubernetes, Terraform, etc.)

- Log Management (ELK Stack, Graylog, Splunk, Papertrail, etc.)

- Monitoring (Nagios, Icinga, Datadog, Zabbix, Monit)

- (many more)

The responsibilities of a DevOps engineer include, but are not limited to: implementing automation pipelines, optimizing computing architecture, conducting system tests for security, managing cloud platforms, monitoring the system, and collaborating with the development team.

Twenty to thirty years ago, there was a clear separation between server administrators and developers. Now developers have servers running on their own computers inside Docker or a virtual machine. PCs are more powerful, and developers are more familiar with how to set up servers.

As a Fullstack Developer, you don’t need to follow the full DevOps engineer path above. Just pick a minimal set of tools to manage your product at a very basic level, and improve it over time.

Warning: The following technologies are only my favorite choices for learning DevOps as a JavaScript Fullstack Developer. This is not the recommended path for everyone. Consider it a starter list for DevOps work.

Hosting (Ubuntu)

There are many types of hosting services to choose from: shared web hosting, virtual private server (VPS), dedicated hosting, application hosting, etc.

You can use a Platform as a Service (PaaS) such as Heroku, Now.sh, or Netlify to build, run, and manage applications without the complexity of building and maintaining the infrastructure typically associated with developing and launching an app.

I prefer a VPS because I want total control of the server and lower costs. A PaaS does save you time, but it costs more as you scale, because almost every little thing is billed separately.

A server operating system (server OS) is a type of operating system that is designed to be installed and used on a server computer. It is an advanced version of an operating system, having features and capabilities required within a client-server architecture or similar enterprise computing environment.

Some common examples of server operating systems include CentOS, Fedora, Ubuntu, Debian, FreeBSD, CoreOS, and Windows Server. Ubuntu is arguably the most popular Linux distribution and certainly the one I love, and it has a very large package repository. This is also a biased choice, because I already had experience with Ubuntu Desktop.

You can’t find a DevOps engineer who doesn’t know Bash scripting! It’s a MUST! It’s basically OS-level programming, with no strings attached and none of the restrictions you face at the application level. It gives a lot of power to people who know their way around it.

Security is important. You must follow a security checklist when configuring your server, covering items such as the root user, a basic firewall, SSH authentication, and security updates. Attackers will often attempt to exploit unpatched flaws or access default accounts, unused pages, unprotected files and directories, etc. to gain unauthorized access to or knowledge of the system.

Web Server (Nginx)

A web server stores and delivers the content for a website – such as text, images, video, and application data – to clients that request it. The most common type of client is a web browser, which requests data from your website when a user clicks on a link or downloads a document on a page displayed in the browser.

A web server communicates with a web browser using the Hypertext Transfer Protocol (HTTP). The content of most web pages is encoded in Hypertext Markup Language (HTML). The content can be static (for example, text and images) or dynamic (for example, a computed price or the list of items a customer has marked for purchase). To deliver dynamic content, most web servers support server‑side scripting languages to encode business logic into the communication. Commonly supported languages include Active Server Pages (ASP), JavaScript, PHP, Python, and Ruby.

Nginx and Apache are two popular web servers. The main difference between them lies in their design architecture. Apache traditionally uses a process-driven approach and dedicates a process or thread to each connection, whereas Nginx uses an event-driven architecture in which a single worker handles many requests within one thread.
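To make the event-driven idea more concrete, here is a toy event loop in JavaScript. It is only a sketch of the concept, not how Nginx is implemented: `runEventLoop` and `handleRequest` are hypothetical names, and the "I/O wait" is simulated by putting a callback back on the queue.

```javascript
// Toy event loop: one thread, one queue of pending callbacks.
function runEventLoop(initialTasks) {
  const queue = [...initialTasks];
  const log = [];
  // Handlers call `defer` instead of blocking while they wait for I/O.
  const defer = (callback) => queue.push(callback);
  while (queue.length > 0) {
    const task = queue.shift();
    task({ defer, log });
  }
  return log;
}

// Hypothetical request handler: start work, "wait" for I/O, then respond.
function handleRequest(name) {
  return ({ defer, log }) => {
    log.push(`${name}: started`);
    defer(({ log }) => log.push(`${name}: responded`));
  };
}

const eventLog = runEventLoop([handleRequest("A"), handleRequest("B")]);
console.log(eventLog);
// [ 'A: started', 'B: started', 'A: responded', 'B: responded' ]
```

Request B starts before request A has responded, even though only one thread is doing the work. A thread-per-request design would instead park a whole thread on A until it finished.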

Nginx, pronounced like “engine-ex”, is an open-source web server that, since its initial success as a web server, is now also used as a reverse proxy, HTTP cache, and load balancer.
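The load balancer role is easy to picture with a small sketch. Round-robin is one common strategy (and, as far as I know, the default one in Nginx): each new request goes to the next server in the list. The function name and the backend addresses below are made up for illustration.

```javascript
// Returns a function that hands out upstream servers in round-robin order.
function createRoundRobin(upstreams) {
  if (upstreams.length === 0) {
    throw new Error("at least one upstream is required");
  }
  let next = 0;
  return function pick() {
    const target = upstreams[next];
    next = (next + 1) % upstreams.length; // wrap around to the first server
    return target;
  };
}

// Hypothetical backend addresses, for illustration only.
const pickUpstream = createRoundRobin(["10.0.0.1:3000", "10.0.0.2:3000"]);
const picks = [pickUpstream(), pickUpstream(), pickUpstream()];
console.log(picks);
// [ '10.0.0.1:3000', '10.0.0.2:3000', '10.0.0.1:3000' ]
```

In practice you would not write this yourself. You would list the backends in the Nginx configuration and let Nginx do the balancing.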

Nginx has a lightweight structure and is generally faster than Apache at serving static content. For dynamic content, it relies on external modules or hands the request off to an application server.

I prefer Nginx because it is lightweight and easy on server resources.

CI/CD (CircleCI)

Microservices architecture has pushed DevOps to the forefront. Things used to be manual. Now containerization accelerates DevOps and gives teams the ability to deploy quickly. You can automate a lot of tasks and make it easy to move things around.

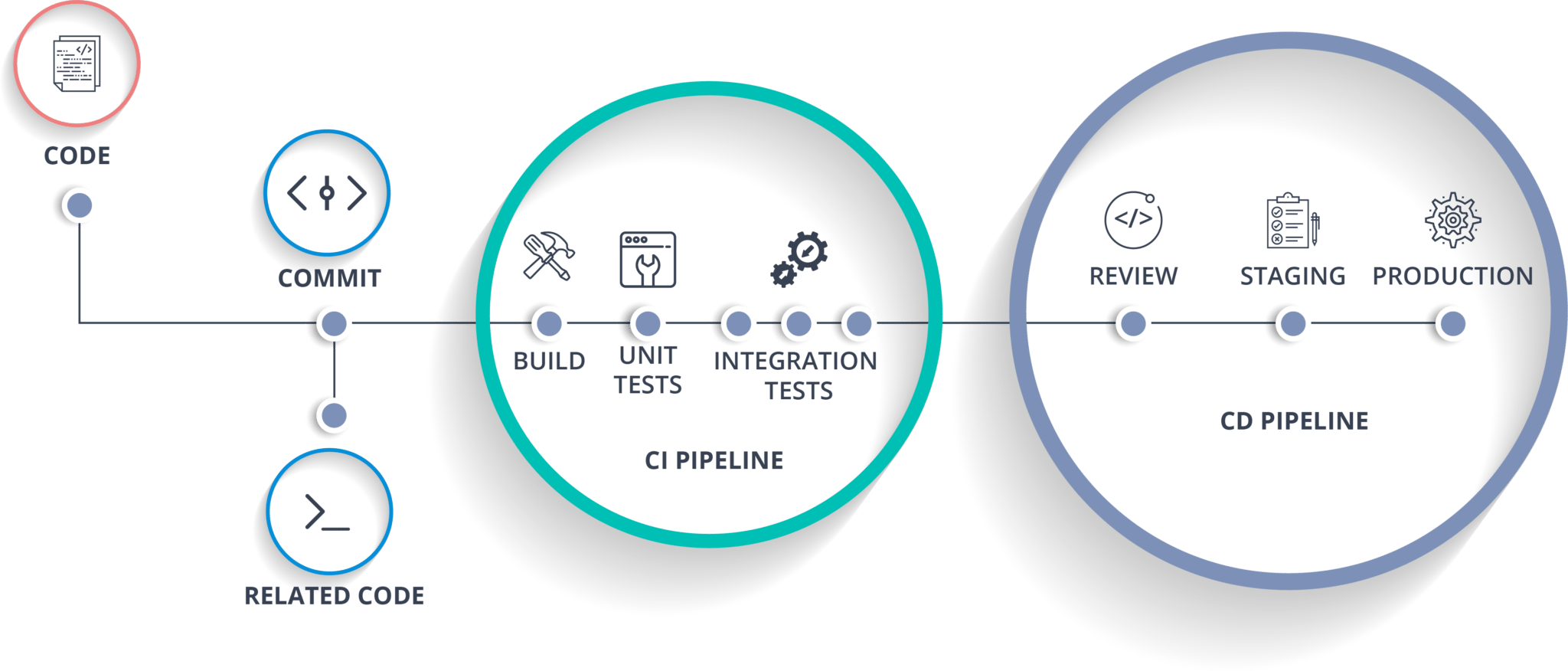

Often, teams struggle to ship software into the customer’s hands due to lack of consistency and excessive manual labor. Continuous integration (CI) and continuous delivery (CD) deliver software to a production environment with speed, safety, and reliability.

Continuous Integration (CI) is a development practice that requires developers to integrate code into a shared repository several times a day. Each check-in is then verified by an automated build, allowing teams to detect problems early. By integrating regularly, you can detect errors quickly, and locate them more easily.

Continuous Delivery (CD) has been around for a while and has matured as more and more elements of deployment became easy to automate. Increasingly, the entire application landscape is managed by automated platforms. DevOps has moved from having no well-defined methodology to having quite a few established, programmable tools.

The CI/CD pipeline is the backbone of the modern DevOps environment. It bridges the gap between development and operations teams by automating the building, testing, and deployment of applications.
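A pipeline is essentially an ordered list of stages where a failure stops everything that comes after it. Here is a minimal sketch of that fail-fast behavior. `runPipeline` and the stage functions are hypothetical, and a real CI tool would run shell commands such as `npm test` rather than inline functions.

```javascript
// Runs each stage in order and stops at the first failure (fail fast).
function runPipeline(stages) {
  const results = [];
  for (const { name, run } of stages) {
    try {
      run();
      results.push({ name, status: "passed" });
    } catch (error) {
      results.push({ name, status: "failed", reason: error.message });
      break; // later stages (such as deploy) never run
    }
  }
  return results;
}

// Hypothetical stages; the test stage fails on purpose.
const pipelineResults = runPipeline([
  { name: "build", run: () => {} },
  { name: "test", run: () => { throw new Error("1 test failed"); } },
  { name: "deploy", run: () => {} },
]);
console.log(pipelineResults);
```

Because the test stage throws, the deploy stage never runs. That is exactly the safety net you want from a pipeline: broken code does not reach production.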

Once a CI/CD tool is selected, you must make sure that all environment variables are configured outside the application. CI/CD tools allow setting these variables, masking variables such as passwords and account keys, and configuring them at time of deployment for the target environment.
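On the application side, this means reading settings from the environment instead of hard-coding them. Below is a minimal sketch for a Node.js app. The variable names (`DATABASE_URL`, `API_KEY`, `PORT`) are assumptions for the example, not something your CI/CD tool prescribes.

```javascript
// Reads required settings from the environment instead of hard-coding them.
function loadConfig(env = process.env) {
  const required = ["DATABASE_URL", "API_KEY"]; // hypothetical names
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    // Fail at startup rather than at the first database call.
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    databaseUrl: env.DATABASE_URL,
    apiKey: env.API_KEY,
    port: Number(env.PORT || 3000), // optional, with a default
  };
}

// Passing an explicit object here keeps the example independent of your shell.
const config = loadConfig({
  DATABASE_URL: "postgres://localhost/app",
  API_KEY: "secret",
  PORT: "8080",
});
console.log(config.port); // 8080
```

In the real app you would call `loadConfig()` with no arguments, so the values come from whatever the CI/CD tool injected for the target environment.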

Jenkins, CircleCI, and Travis CI are popular tools, and each has its own strengths and weaknesses. Which CI system should you choose? That depends on your needs and the way you plan to use it.

Jenkins is very popular in the enterprise thanks to its rich plugin ecosystem, but it is overkill for individuals or small teams: it requires a dedicated server and considerable time to configure. Jenkins is recommended for big projects that need a lot of customization, which its many plugins make possible.

Travis CI is recommended when you are working on open-source projects that should be tested in different environments.

CircleCI is a cloud-based system: no dedicated server is required, and you do not need to administer it. However, it also offers an on-prem solution that allows you to run it in your private cloud or data center. I prefer CircleCI for its simplicity and generous free plan!

Containerization (Docker)

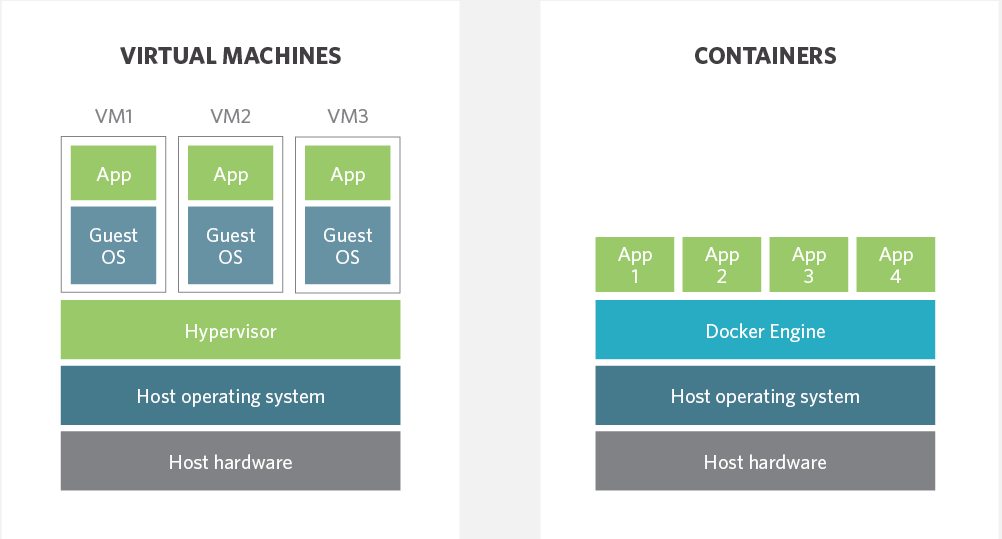

Containerization involves bundling an application together with all of the configuration files, libraries, and dependencies it needs to run efficiently and reliably across different computing environments. It is a major trend in software development, and its adoption will likely grow in both magnitude and speed.

Instead of virtualizing the hardware stack as virtual machines do, containers virtualize at the operating system level, with multiple containers running directly atop the OS kernel. This approach has the following benefits:

- Consistent Environment: Containers give developers the ability to create predictable environments that are isolated from other applications. Containers can also include software dependencies needed by the application, such as specific versions of programming language runtime and other software libraries.

- Run Anywhere: Containers are able to run virtually anywhere, greatly easing development and deployment: on Linux, Windows, and Mac operating systems; on virtual machines or bare metal; on a developer’s machine or in data centers on-premises; and of course, in the public cloud.

- Isolation: Containers virtualize CPU, memory, storage, and network resources at the OS-level, providing developers with a sandboxed view of the OS logically isolated from other applications.

Docker surely gets a lot of attention, but it is not the only container option out there. Other container runtime environments, including CoreOS rkt, Mesos, LXC, and others, are steadily growing as the market continues to evolve and diversify.

Docker Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services. It’s a great choice for small and medium applications, and it makes managing a handful of containers dead simple.

Kubernetes makes deploying and managing your application at scale easier. It automates rollouts and rollbacks and monitors the health of your services, so a bad rollout can be stopped before it causes damage. It’s way more complicated than Docker Compose, but it is necessary for managing a huge cluster of applications that need auto scaling and high availability.
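For an orchestrator to monitor health, your app has to expose something it can check. With Kubernetes, that is typically an HTTP endpoint it probes periodically, where a success status means healthy and an error status such as 503 means unhealthy. Here is a minimal Node.js sketch. The `/healthz` path, the function names, and the `checks` object are assumptions for illustration.

```javascript
const http = require("http");

// `checks` maps a dependency name to a function returning true when healthy.
function checkHealth(checks) {
  const failed = Object.keys(checks).filter((name) => !checks[name]());
  return failed.length === 0
    ? { statusCode: 200, body: { status: "ok" } }
    : { statusCode: 503, body: { status: "unhealthy", failed } };
}

// Builds a server exposing the assumed /healthz path; it is not started here.
function createHealthServer(checks) {
  return http.createServer((req, res) => {
    if (req.url !== "/healthz") {
      res.writeHead(404);
      res.end();
      return;
    }
    const { statusCode, body } = checkHealth(checks);
    res.writeHead(statusCode, { "Content-Type": "application/json" });
    res.end(JSON.stringify(body));
  });
}

// Usage (hypothetical check): createHealthServer({ database: () => true }).listen(3000);
```

Keeping `checkHealth` separate from the HTTP server makes it easy to unit test, and the same endpoint also works for a Docker health check or an uptime monitor.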

Key Takeaways

What I’ve covered here are the minimum requirements for a Fullstack JavaScript Developer to self-host a SaaS product on a VPS in the beginning.

As an indie maker, I need a quick-start solution with minimum cost and resources. I want to keep the system extremely simple. When the business grows, I’ll add more supporting tools such as a log management service, application monitoring service, infrastructure monitoring service, search service, secret management service, CDN service, etc.

These supporting services consume A LOT of resources if you host them yourself, and they cost a lot of money if you use a cloud solution. The point is to add only what’s really needed, balancing time against resources.

Finally, maintaining a realistic implementation schedule is the key. A full-fledged DevOps solution is doable, but it should not be over-engineered relative to the current stage of the product and the business.